Meta Platforms founder and chief executive Mark Zuckerberg on Wednesday held an all-hands presentation with the team of the company’s artificial intelligence group, including deep learning pioneer Yann LeCun, on the topic “Building for the metaverse with AI.”

The presentation is live on the Facebook page of the AI Lab until roughly 11 am, Pacific Time.

The AI Lab’s proposition, displayed on its Facebook home page, is “Through the power of artificial intelligence, we will enable a world where people can easily share, create, and connect physically and virtually, with anyone, anywhere.”

Zuckerberg called artificial intelligence “perhaps the most important foundational technology of our time.”

Zuckerberg said the company is unveiling something called “Project CAIRaoke,” which he described as “a fully end-to-end neural model for building on device assistants. It combines the approach behind BlenderBot with the latest in conversational AI to deliver better dialog capabilities.”

He said the company’s focus in AI for The Metaverse consists of two areas of AI research: “Egocentric perception,” and “a whole new class of generative AI models,” as Zuckerberg put it.

Meta’s explanation of self-supervised learning.

Zuckerberg announced “no language left behind,” which would result in a “single model that can understand hundreds of languages.”

He also announced what he called “a universal speech translator” that would offer instantaneous translation. “AI is going to deliver that within our lifetimes,” said Zuckerberg.

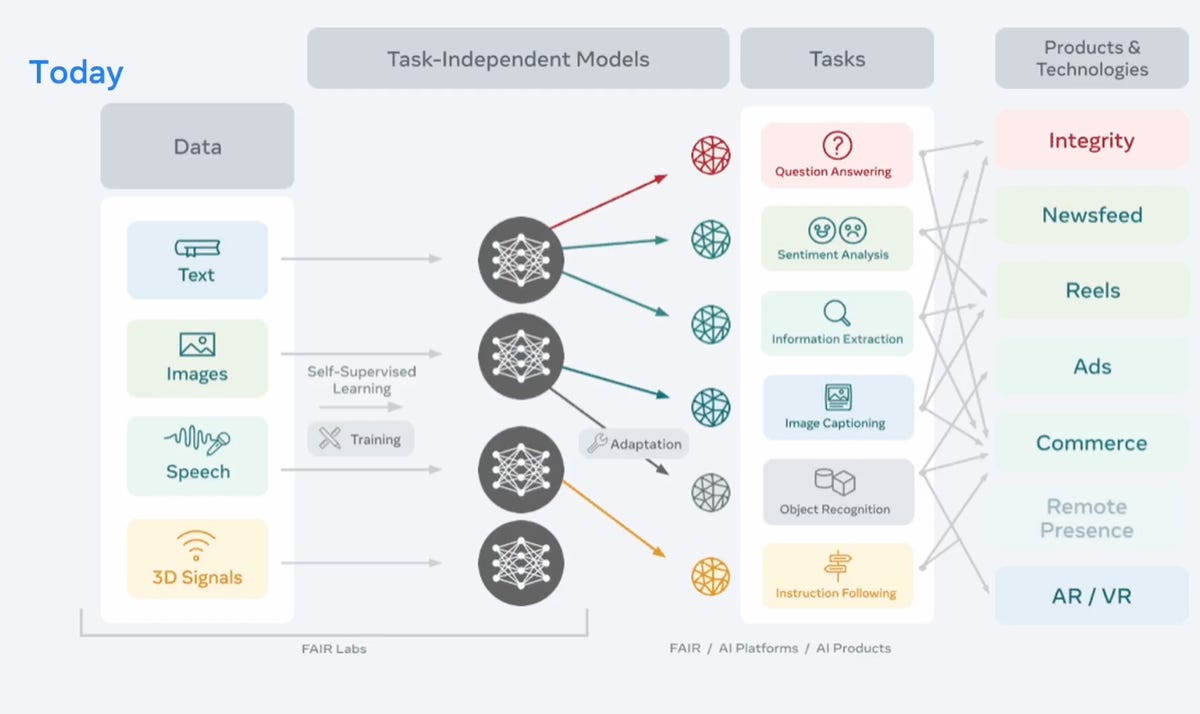

The day’s presentation was focused on the theme of “self-supervised learning,” where a neural network is developed by amassing vastly more data without carefully applied labels. LeCun explained to IEEE Spectrum’s Eliza Strickland the virtues of self-supervised learning in an email exchange published Tuesday.

Following Zuckerberg’s introduction, Jérôme Pesenti, the leader of the AI team, explained that self-supervised models are increasingly catching up with supervised neural network training, not only in language models but in areas such as image recognition.

In a prepared video, Project CAIRaoke was presented as a smarter intelligent assistant that would properly understand spoken commands. The video made fun of the common pitfalls of assistant technology, such as when an assistant totally misunderstands a user’s spoken request. It termed such systems “level 2,” or “mechanical,” assistants, which are useful for “single shot interactions” but not for “multi-turn interactions.”

Meta, the presentation said, will produce “supercharged” assistants.

In the latter part of the day’s presentation, LeCun was joined by fellow deep learning pioneer Yoshua Bengio in a chat hosted by Lex Fridman for a discussion of the path to “human-level intelligence.”

Bengio opined, “we are still far from human-level AI.” Bengio observed that humans “attend” to novel situations, and take time to reason about a problem. “We can take inspiration about how brains do it,” he said, including “conscious processing.”

Bengio used the example of a person learning to drive on the left side of the road for the first time when visiting London. Much of the learning is made possible, he said, by humans having a lot of information that is consistent and familiar, and being able to re-train habits to fit the novel elements.

LeCun, while concurring with Bengio about the problems to be tackled, said, “I’m more focused on solutions.” He cited human and animal learning via small numbers of examples. “What kind of learning do humans and animals use that we are not able to reproduce in machines? That’s the big question I’m asking myself,” said LeCun.

From left, host Lex Fridman, Yann LeCun, Yoshua Bengio.

“I think what’s missing is the ability of humans and animals to learn how the world works, to have a world model,” said LeCun. He referred to Bengio’s example of left-side driving. “The rules of physics don’t change,” such as turning the steering wheel of a car. “How we get machines to learn world models, how the world works, mostly by observation, to figure out very basic things about the world.”

“Common sense is a collection of world models,” argued LeCun.