Using LangChain, programmers have been able to combine ultrasound imaging for things such as breast cancer diagnosis with a ChatGPT-style natural language prompt. (Image: Korea Advanced Institute of Science and Technology)

The generative AI movement of OpenAI’s ChatGPT and its derivatives is perhaps best known for bad rap lyrics and automated programming assistance. But a new open-source framework riding on top of large language models is bringing a more practical focus to GenAI.

LangChain, just over a year old, is what you could think of as a gentle introduction to programming AI agents through a very simple set of libraries riding on top of GenAI models. The technology is supported by a venture-backed startup of the same name, which offers a server platform for commercial deployment of apps constructed with LangChain.


The crux of LangChain is that it combines a large language model prompt with various external resources. In this way, it can grab data from a database, for example, and pass language model output to an application, get that app’s output and pass it back to the language model, and on and on.
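To make the chaining idea concrete, here is a minimal sketch in plain Python. It deliberately does not use LangChain's own classes. `fake_llm` and `fake_database` are hypothetical stand-ins for a real model call and a real data source. The point is only the shape of the loop: each step's output becomes the next step's input, so a language model can hand work to an external resource and then take the result back.

```python
from typing import Callable

# Hypothetical stand-in for a language model call; a real chain would call an LLM API here.
def fake_llm(prompt: str) -> str:
    if prompt.startswith("Summarize:"):
        # Pretend the model turns raw rows into a sentence.
        return "Total sales: " + prompt.split("rows=")[1]
    # Otherwise pretend the model turns the question into a database query.
    return "SELECT SUM(amount) FROM sales"

# Hypothetical external resource: a database that always returns one aggregate.
def fake_database(query: str) -> str:
    return "rows=1250"

Step = Callable[[str], str]

def run_chain(steps: list[Step], user_input: str) -> str:
    """Feed each step's output into the next step, in order."""
    value = user_input
    for step in steps:
        value = step(value)
    return value

chain: list[Step] = [
    fake_llm,                                     # question -> query
    fake_database,                                # query -> raw result
    lambda rows: fake_llm("Summarize: " + rows),  # raw result -> answer
]

print(run_chain(chain, "How much did we sell?"))  # Total sales: 1250
```

Frameworks such as LangChain add much more on top of this, including prompt templates, memory, and tool selection, but the pass-the-output-along pattern is the core of the "chain."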

The framework thus allows for the chaining together of resources, where each resource becomes an agent of sorts, handling a piece of the problem within the context of the language model and the prompt.

Already, there are intriguing examples of practical uses from different disciplines.

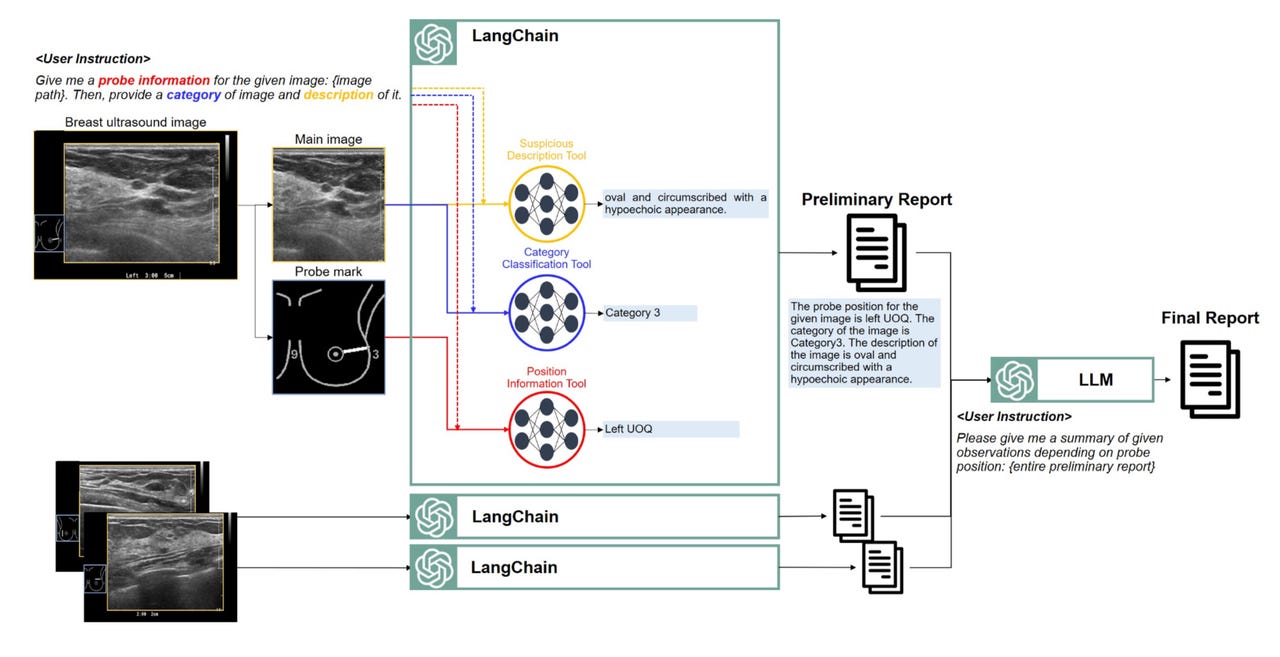

For example, using LangChain, programmers have been able to combine ultrasound imaging for tasks such as breast cancer diagnosis with a ChatGPT-style natural language prompt. A radiologist looking at a breast ultrasound image can invoke the computer as an analytical assistant with a phrase such as, “Please give me a summary of given observations depending on probe position.”

An interesting aspect of the program, built by Jaeyoung Huh and colleagues at the Korea Advanced Institute of Science and Technology, is that it brings together three separate neural networks of a widely used type, ResNet-50, the classic vision network that excels at image classification.

Each of the three neural networks is trained separately to perform one task, such as identifying a suspicious form in an ultrasound image, classifying that form, and picking out the location of the form in the body.

The purpose of LangChain here is to wrap these three networks in natural language commands, such as, “give me a probe information for the given image,” and then, “give me a summary of given observations.”
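The routing idea can be sketched in a few lines. This is not the KAIST team's code: the three functions below are hypothetical stubs standing in for the trained ResNet-50 models, their outputs are invented strings, and simple keyword matching stands in for the language model that, in the real system, decides which network to call.

```python
from typing import Callable

# Hypothetical stubs for the three separately trained ResNet-50 models.
# Each takes an image (here just a file name) and returns a finding as text.
def detect_lesion(image: str) -> str:
    return "suspicious region found"

def classify_lesion(image: str) -> str:
    return "likely benign"

def locate_probe(image: str) -> str:
    return "probe at upper outer quadrant"

# Keyword -> model. In the real system a language model, not keywords, picks the tool.
TOOLS: dict[str, Callable[[str], str]] = {
    "probe": locate_probe,
    "classify": classify_lesion,
    "detect": detect_lesion,
}

def route(command: str, image: str) -> list[str]:
    """Run the models a natural-language command asks for.
    A request for a 'summary' runs all three models."""
    text = command.lower()
    if "summary" in text:
        return [model(image) for model in TOOLS.values()]
    return [model(image) for key, model in TOOLS.items() if key in text]

print(route("give me a probe information for the given image", "scan_01.png"))
print(route("give me a summary of given observations", "scan_01.png"))
```

The design point is that the radiologist never calls a network directly; the natural-language layer decides which of the specialized models to run and gathers their answers.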


At the simplest level, then, LangChain can be a way to create a user-friendly front-end to AI, the kind long dreamed of by specialists in fields such as medical AI who sought to create a doctor’s assistant that would respond to spoken commands.

A goal of some LangChain efforts is to try to eliminate GenAI’s infamous hallucinations — the programs’ propensity to confidently assert false information — by grounding the technology in authoritative external sources. A group at consulting firm Accenture, led by Sohini Roychowdhury, describes a system for making financial predictions via a “finance chatbot.”

The system takes cells from a spreadsheet and converts them into natural-language statements about the data, which can then be searched over to find a sentence that matches a question.
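A minimal sketch of the cell-to-sentence step follows, assuming the first column identifies each row (a month, say) and the remaining columns are metrics. The sentence pattern, the function name, and the figures are all hypothetical illustrations, not Accenture's format or data.

```python
def rows_to_sentences(header: list[str], rows: list[list[str]]) -> list[str]:
    """Turn each non-key cell into a sentence: '<metric> for <row key> was <value>.'
    Assumes the first column identifies the row (e.g., a month)."""
    sentences = []
    for row in rows:
        key = row[0]
        for column, value in zip(header[1:], row[1:]):
            sentences.append(f"{column} for {key} was {value}.")
    return sentences

# Made-up example spreadsheet.
header = ["Month", "Sales", "Profit"]
rows = [["January", "$120,000", "$18,000"], ["February", "$95,000", "$11,500"]]
for sentence in rows_to_sentences(header, rows):
    print(sentence)
```

Once the table is flattened into sentences like "Sales for January was $120,000.", ordinary text search can find the cell that answers a question.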

Here’s how it works: A user prompts the language model with a natural-language question such as, “How are my sales doing?” The prompt is fed into a template that generates a more precise prompt to the language model. That prompt might include more detailed question words than a person would think to type, which makes for a better prompt.
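A prompt template of this kind can be as simple as string substitution. The sketch below uses Python's standard `string.Template`; the template wording is an assumption, and so is the idea that the application already knows which metrics and time period to mention. The filled-in text would then go to the language model, which returns the more precise query.

```python
from string import Template

# Hypothetical expansion template; the wording is an assumption, not Accenture's.
EXPAND = Template(
    "Rewrite the question '$question' as a precise query about the spreadsheet. "
    "Name the relevant metrics ($metrics) and the time period ($period)."
)

def expand_prompt(question: str, metrics: list[str], period: str) -> str:
    """Fill the template; the result would then be sent to the language model."""
    return EXPAND.substitute(
        question=question, metrics=", ".join(metrics), period=period
    )

print(expand_prompt("How are my sales doing?", ["sales", "profit"], "last quarter"))
```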

The improved prompt triggers a keyword search, and that search picks out which of the sentences — built from tabular data — point to the most relevant data in the table (e.g., sales, profit).
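Here is a minimal sketch of the keyword-search step, assuming the simplest possible scoring: count how many words a sentence shares with the query. That word-overlap score is a stand-in chosen for illustration; the source does not say which ranking function the Accenture system uses, and production systems typically use something like BM25 or embeddings.

```python
import re

def tokenize(text: str) -> set[str]:
    """Lowercase alphabetic words only (numbers are ignored)."""
    return set(re.findall(r"[a-z]+", text.lower()))

def keyword_search(query: str, sentences: list[str], top_k: int = 2) -> list[str]:
    """Rank sentences by how many query words they share (ties keep original order)."""
    q = tokenize(query)
    scored = [(len(q & tokenize(s)), i, s) for i, s in enumerate(sentences)]
    scored.sort(key=lambda t: (-t[0], t[1]))
    return [s for score, _, s in scored[:top_k] if score > 0]

# Hypothetical sentences generated from a spreadsheet.
sentences = [
    "Sales for January was $120,000.",
    "Profit for January was $18,000.",
    "Headcount for January was 42.",
]
print(keyword_search("What were sales and profit in January?", sentences))
```

The two sentences mentioning both a requested metric and the month outrank the headcount sentence, which shares only "January" with the query.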

Once the relevant data is retrieved, a second set of templates helps the chatbot formulate a response to the query using the sentences from the tabular data in a chat response.
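The second template can be sketched the same way. This hypothetical answer prompt tells the model to rely only on the retrieved sentences, which is the grounding step meant to reduce hallucination. The wording and function name are assumptions, not the system's actual template.

```python
from string import Template

# Hypothetical answer template: the model is told to use only the retrieved facts.
ANSWER_PROMPT = Template(
    "Answer the question using only the facts below. "
    "If the facts do not answer it, say so.\n"
    "Question: $question\n"
    "Facts:\n$facts\n"
    "Answer:"
)

def build_answer_prompt(question: str, facts: list[str]) -> str:
    """Format retrieved sentences as a bulleted list inside the prompt."""
    fact_lines = "\n".join(f"- {f}" for f in facts) if facts else "- (none found)"
    return ANSWER_PROMPT.substitute(question=question, facts=fact_lines)

print(build_answer_prompt("How are my sales doing?",
                          ["Sales for January was $120,000."]))
```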


Roychowdhury and team don’t manage to eliminate hallucinations. Instead, they designed a “confidence” scoring mechanism by which the chatbot checks its answers against the question, sees how well they match, and then assigns a confidence score of high, medium or low for its answer.
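One crude way to check an answer against its question is to measure how much of the question the answer actually addresses. The sketch below does that with word overlap and buckets the result into high, medium, or low. Both the overlap measure and the 0.6 and 0.3 thresholds are arbitrary assumptions for illustration; the source does not describe how the Accenture team computes its score.

```python
import re

def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z]+", text.lower()))

def confidence(question: str, answer: str) -> str:
    """Hypothetical score: fraction of question words echoed in the answer,
    bucketed into high / medium / low. Thresholds are arbitrary."""
    q = words(question)
    if not q:
        return "low"
    overlap = len(q & words(answer)) / len(q)
    if overlap >= 0.6:
        return "high"
    if overlap >= 0.3:
        return "medium"
    return "low"

print(confidence("january sales", "Sales for January was $120,000."))
```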

“The confidence score tells the user to assert caution while making key decisions using medium to low confidence responses,” explains Roychowdhury. “The confidence score further helps ascertain which user queries need to be further refined for reliability.”

Programmers are finding that LangChain can be a way to automate some extremely mundane tasks. One example is checking employees’ Web usage to make sure they are not browsing illicit Web sites. A document describing a corporation’s “acceptable use policy” is uploaded into what’s called a vector database, a special kind of database that stores text as numerical vectors, or embeddings, so that text from, say, a URL can be compared with passages in a document to see how closely they match.

When a person types a URL into a browser, both the URL of the site and the summary of the site’s content can be automatically compared to the policy document in the vector database to see if the site’s content matches any prohibited topics. The programmer can automate the comparison with a simple text prompt, asking, “Does anything in this site match prohibited items?”
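The comparison a vector database performs can be sketched with cosine similarity. To keep the example self-contained, a simple bag-of-words count vector stands in for a real embedding model, and a Python list stands in for the database; the policy rules, the 0.3 threshold, and the function names are hypothetical.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

# Hypothetical acceptable-use policy, "indexed" once up front.
POLICY = [
    "Gambling and online betting sites are prohibited.",
    "Sites hosting pirated software or movies are prohibited.",
]
POLICY_VECTORS = [(rule, embed(rule)) for rule in POLICY]

def check_site(url: str, summary: str, threshold: float = 0.3):
    """Return the best-matching policy rule if similarity passes the threshold, else None."""
    site = embed(url.replace(".", " ").replace("/", " ") + " " + summary)
    rule, score = max(((r, cosine(site, v)) for r, v in POLICY_VECTORS), key=lambda t: t[1])
    return rule if score >= threshold else None

print(check_site("https://best-betting.example/", "Online betting and casino gambling odds."))
print(check_site("https://news.example/", "Daily world news and weather."))
```

With real embeddings, a site about "sports wagering" could match the gambling rule even without sharing exact words, which is why the article's setup uses a vector database rather than plain keyword matching.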


Such an example makes clear that large language models and LangChain are moving beyond individuals’ queries. They are becoming a way for programmers to use natural language commands to integrate the various tools at their disposal for behind-the-scenes functions.

LangChain is not the only framework for building workflows that have an agent quality, and more such frameworks are being created, including Microsoft’s Semantic Kernel and the open-source LlamaIndex, which builds upon LangChain.

A group of scholars at Stanford, UC Berkeley and Carnegie Mellon, along with collaborators from private industry, in October introduced what they call DSPy, which is a programming approach that replaces hand-coded natural language prompts with functional descriptions and can in turn automatically generate prompts. The functional descriptions can be very broad, such as, “consume questions and return answers.” DSPy features a compiler to optimize the flow of language models and supporting tools.
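The following toy illustrates the idea of declaring what a step should do ("question -> answer") and letting a compile step choose the prompt. It is not DSPy's API, which has its own modules and optimizers. Every name here is hypothetical, including `fake_lm`, a pretend model that answers tersely only when told to. The "compiler" simply tries each candidate instruction on a small labeled dev set and keeps the one that scores best.

```python
from typing import Callable

LM = Callable[[str], str]

def parse_signature(sig: str) -> tuple[list[str], list[str]]:
    """Split a 'question -> answer' style description into input and output field names."""
    inputs, outputs = sig.split("->")
    return [f.strip() for f in inputs.split(",")], [f.strip() for f in outputs.split(",")]

def make_prompt(instruction: str, sig: str, example: dict) -> str:
    ins, outs = parse_signature(sig)
    lines = [instruction] + [f"{name}: {example[name]}" for name in ins]
    return "\n".join(lines + [f"{outs[0]}:"])

def compile_program(sig: str, candidates: list[str], lm: LM, devset: list[dict],
                    metric: Callable[[str, dict], bool]):
    """Toy 'compiler': score each candidate instruction on the dev set, keep the best."""
    def score(instr: str) -> int:
        return sum(metric(lm(make_prompt(instr, sig, ex)), ex) for ex in devset)
    best = max(candidates, key=score)
    return lambda **inputs: lm(make_prompt(best, sig, inputs))

def fake_lm(prompt: str) -> str:
    """Hypothetical model: answers 'a + b' questions tersely only if the prompt asks for it."""
    question = prompt.split("question: ")[1].split("\n")[0]
    a, b = (int(x) for x in question.split("+"))
    if "number only" in prompt:
        return str(a + b)
    return f"The answer is probably {a + b}, give or take."

devset = [{"question": "2 + 3", "answer": "5"}, {"question": "10 + 4", "answer": "14"}]
candidates = ["Answer the question.", "Answer the question. Reply with a number only."]
program = compile_program("question -> answer", candidates, fake_lm, devset,
                          metric=lambda pred, ex: pred == ex["answer"])
print(program(question="7 + 8"))
```

The programmer writes only the signature, a metric, and some examples; choosing the prompt wording becomes a search problem rather than hand-tuning. Real optimizers search a much richer space, for example by also selecting few-shot demonstrations.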

The DSPy effort is analogous, the authors note, to deep learning frameworks such as Torch and Theano, which advanced neural networks through layers of abstraction.


The authors claim dramatic improvements in quality over having a person manually craft a prompt in each instance. “Without hand-crafted prompts and within minutes to tens of minutes of compiling, compositions of DSPy modules can raise the quality of simple programs from 33% to 82%,” they write.

It’s very early days in the GenAI framework game, and you can expect many more layers of abstraction on top of, underneath, and around LangChain in the coming year.
