Generative artificial intelligence (AI) image creators are increasingly popular, but their use has also sparked debates about copyrighted material in training datasets. Now new information about Adobe Firefly, the company’s answer to generative AI tools like Midjourney and DALL-E, complicates the conversation further.

Like other image generators, Firefly creates visual content, vector images, text effects, and more from user-written text prompts. But Adobe has positioned Firefly as an outlier in the space because of its training dataset, which the company touts as a form of quality control.

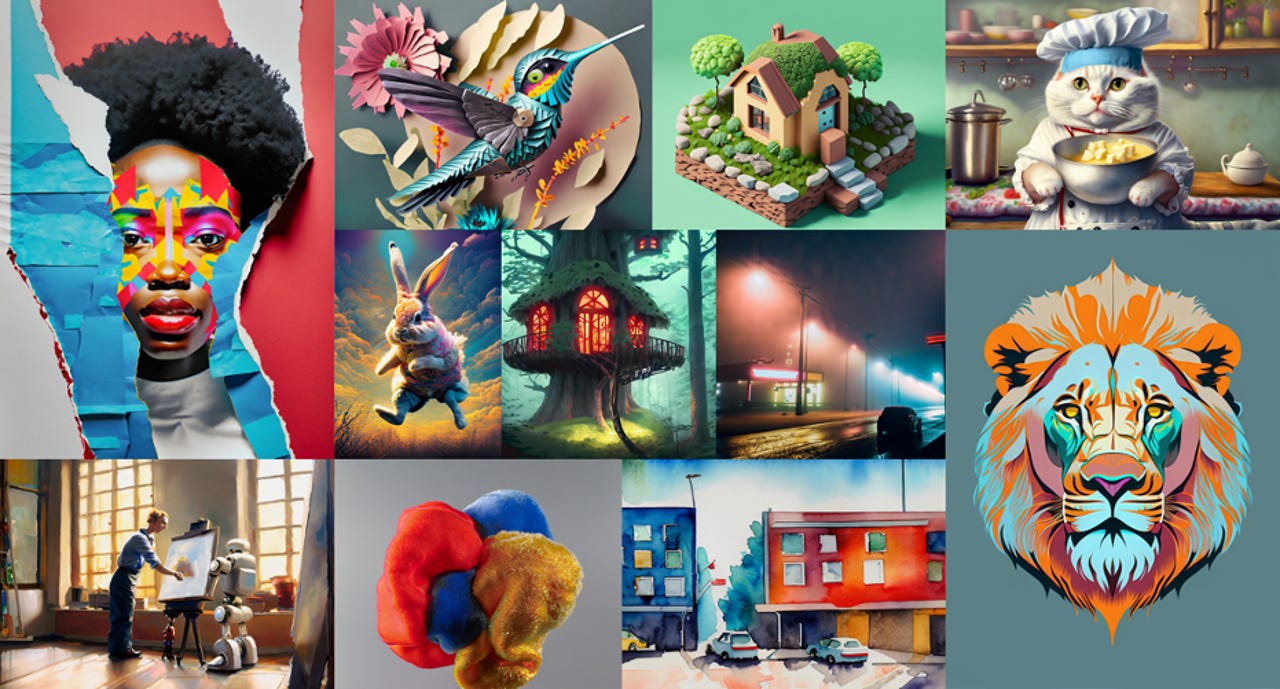

The AI models that power image generators are trained on billions of images. Properly licensing that much content is expensive, and computing at this scale is already pricey, so companies have an incentive to scrape free content from the internet without crediting or compensating creators. Popular text generators, including ChatGPT, which runs on large language models (LLMs), are trained the same way, on text scraped from the web.

AI companies face growing scrutiny for gathering data this way. Stability AI, the company behind Stable Diffusion, and Midjourney have been sued by artists, and Stability AI has also been sued by Getty Images, over the use of copyrighted work without proper licensing. In December, The New York Times sued OpenAI and Microsoft for using its work to train ChatGPT.

Adobe’s website says Firefly is “commercially safe” compared to competitor products because it was trained on “licensed content, such as Adobe Stock, and public domain content where the copyright has expired.” Adobe even has a compensation plan for certain Stock contributors whose content was used to train the first iteration of the tool.

However, Bloomberg reported Friday that about 5% of Firefly’s training data is AI-generated, including images made with competing tools like Midjourney. That content entered Firefly’s dataset because creators were allowed to submit AI-generated images to Adobe’s Stock marketplace, and those contributors were compensated under Adobe’s program.

For Adobe to use synthetic content after promoting its dataset as stricter than its competitors’ seems counterintuitive. Although the company is not legally required to disclose its training data, this detail casts doubt on its quality claims, especially since some of those images were created with tools now under fire over copyright.

Despite the revelation, Adobe maintains that it quality controls its dataset. “Every image submitted to Adobe Stock, including a very small subset of images generated with AI, goes through a rigorous moderation process to ensure it does not include IP, trademarks, recognizable characters or logos, or reference artists’ names,” an Adobe spokesperson told Bloomberg.

The discovery points to a discrepancy between public messaging and internal communications. Bloomberg found that an Artist Relations manager for Adobe Stock posted in a Discord community that Firefly would use a new training database free of generative AI once it left beta. But after the public release of the tool, another Adobe employee said on Discord that AI-generated imagery “enhances our dataset training model, and we decided to include this content for the commercially released version of Firefly.”

The company seems to be drawing a line between synthetic content in general and specific elements that need to be licensed, but the territory is murky. Whether Firefly users will run into copyright issues in the future remains to be seen. Given how new generative image tools are, it’s safe to say there’s some level of legal risk in creating content with any of them.