New rules for online political advertising will be put forward by European Union lawmakers next year, with the aim of boosting transparency around sponsored political content.

The Commission said today that it wants citizens, civil society and responsible authorities to be able to clearly see the source and purpose of political advertising they’re exposed to online.

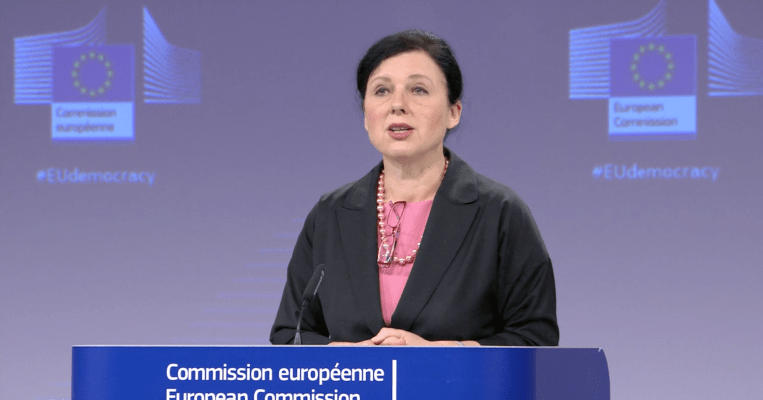

“We are convinced that people must know why they are seeing an ad, who paid for it, how much, what microtargeting criteria were used,” said commissioner Vera Jourova, speaking during a press briefing at the unveiling of a Democracy Action Plan.

“New technologies should be tools for emancipation — not for manipulation,” she added.

In the plan, the Commission says the forthcoming political ads transparency proposal will “target the sponsors of paid content and production/distribution channels, including online platforms, advertisers and political consultancies, clarifying their respective responsibilities and providing legal certainty”.

“The initiative will determine which actors and what type of sponsored content fall within the scope of enhanced transparency requirements. It will support accountability and enable monitoring and enforcement of relevant rules, audits and access to non-personal data, and facilitate due diligence,” it adds.

It wants the new rules in place sufficiently ahead of the May 2024 European Parliament elections — with the values and transparency commissioner confirming the legislative initiative is planned for Q3 2021.

Democracy Action Plan

The step is being taken as part of the wider Democracy Action Plan containing a package of measures intended to bolster free and fair elections across the EU, strengthen media pluralism and boost media literacy over the next four years of the Commission’s mandate.

It’s the Commission’s response to rising concerns that election rules have not kept pace with digital developments, including the spread of online disinformation — creating vulnerabilities for democratic values and public trust.

The worry is that long-standing processes are being outgunned by powerful digital advertising tools, operating non-transparently and fatted up on masses of big personal data.

“The rapid growth of online campaigning and online platforms has… opened up new vulnerabilities and made it more difficult to maintain the integrity of elections, ensure a free and plural media, and protect the democratic process from disinformation and other manipulation,” the Commission writes in the plan, noting too that digitalisation has also helped dark money flow unaccountably into the coffers of political actors.

Other issues of concern it highlights include “cyber attacks targeting election infrastructure; journalists facing online harassment and hate speech; coordinated disinformation campaigns spreading false and polarising messages rapidly through social media; and the amplifying role played by the use of opaque algorithms controlled by widely used communication platforms”.

During today’s press briefing Jourova said she doesn’t want European elections to be “a competition of dirty methods”, adding: “We saw enough with the Cambridge Analytica scandal or the Brexit referendum.”

However, the Commission is not going as far as proposing a ban on political microtargeting — at least not yet.

In the near term its focus will be on limiting use in a political context — such as limiting the targeting criteria that can be used. (Aka: “Promoting political ideas is not the same as promoting products,” as Jourova put it.)

The Commission writes that it will look at “further restricting micro-targeting and psychological profiling in the political context”.

“Certain specific obligations could be proportionately imposed on online intermediaries, advertising service providers and other actors, depending on their scale and impact (such as for labelling, record-keeping, disclosure requirements, transparency of price paid, and targeting and amplification criteria),” it suggests. “Further provisions could provide for specific engagement with supervisory authorities, and to enable co-regulatory codes and professional standards.”

The plan acknowledges that microtargeting and behavioral advertising make it harder to hold political actors to account — and that such tools and techniques can be “misused to direct divisive and polarising narratives”.

It goes on to note that the personal data of citizens which powers such manipulative microtargeting may also have been “improperly obtained”.

This is a key acknowledgement that plenty is rotten in the current state of adtech — as European privacy and legal experts have warned for years. Most recently, they have warned that EU data protection rules updated in 2018 are simply not being enforced in this area.

The UK’s ICO, for example, is facing legal action over regulatory inaction against unlawful adtech. (Ironically enough, back in 2018, its commissioner produced a report warning democracy is being disrupted by shady exploitation of personal data combined with social media platforms’ ad-targeting techniques.)

The Commission has picked up on these concerns. Yet its strategy for fixing them is less clear.

“There is a clear need for more transparency in political advertising and communication, and the commercial activities surrounding. Stronger enforcement and compliance with the General Data Protection Regulation (GDPR) rules is of utmost importance,” it writes — reinforcing a finding this summer, in its two-year GDPR review, when it acknowledged that the regulation’s impact has been impeded by a lack of uniformly vigorous enforcement.

The high-level message from the Commission now is that ‘GDPR enforcement is vital for democracy’.

But it’s national data supervisors which are responsible for enforcement. So unless that enforcement gap can be closed it’s not clear how the Commission’s action plan can fully deliver the hoped-for democratic resilience. Media literacy is a worthy goal but a long slow road vs the real-time potency of big-data fuelled adtech tools.

“On the Cambridge Analytica case I referred to it because we do not want the method when the political marketing uses the privileged availability or possession of the private data of people [without their consent],” said Jourova during a Q&A with press, acknowledging the weakness of GDPR enforcement.

“[After the scandal] we said that we are relieved that after GDPR came into force we are protected against this kind of practice — that people have to give consent and be aware of that — but we see that it might be a weak measure only to rely on consent or leave it for the citizens to give consent.”

Jourova described the Cambridge Analytica scandal as “an eye-opening moment for all of us”.

“Enforcement of privacy rules is not sufficient — that’s why we are coming in the European Democracy Action Plan with the vision for the next year to come with the rules for political advertising, where we are seriously considering to limit the microtargeting as a method which is used for the promotion of political powers, political parties or political individuals,” she added.

The Commission says its legislative proposal on the transparency of political content will complement broader rules on online advertising that will be set out in the Digital Services Act (DSA) package — due to be presented later this month (setting out a suite of responsibilities for platforms). So the full detail of how it proposes to regulate online advertising also remains to be seen.

Tougher measures to tackle disinformation

Another major focus for the Democracy Action Plan is tackling the spread of online disinformation.

There are now clear-cut risks in the public health sphere, with concerns that disinformation could undermine COVID-19 vaccination programs. EU lawmakers’ concerns over the issue look to have been accelerated by the coronavirus pandemic.

On disinformation, the Commission says it will be overhauling its current (self-regulatory) approach to tackling online disinformation — aka the Code of Practice on disinformation, launched in 2018 with a handful of tech industry signatories — with platform giants set to face increased pressure from Brussels to identify and prevent co-ordinated manipulation via a planned upgrade to a co-regulatory framework of “obligations and accountability”, as it puts it.

There will clearly also be interplay with the DSA — given it will be setting horizontal accountability rules for platforms. But the beefed up disinformation code is intended to sit alongside that and/or plug the gap until the DSA comes into force (not likely for “years”, following the usual EU co-legislative process, per Jourova).

“We will not regulate on removal of disputed content,” she emphasized on the plan to beef up the disinformation code. “We do not want to create a ministry of truth. Freedom of speech is essential and I will not support any solution that undermines it. But we also cannot have our societies manipulated if there are organized structures aimed at sowing mistrust, undermining democratic stability and so we would be naive to let this happen. And we need to respond with resolve.”

“The worrying disinformation trend, as we all know, is on COVID-19 vaccines,” she added. “We need to support the vaccine strategy by an efficient fight against disinformation.”

Asked how the Commission will ensure platforms take the required actions under the new code, Jourova suggested the DSA is likely to leave it to Member States to decide which authorities will be responsible for enforcing future platform accountability rules.

The DSA will focus on the issue of “increased accountability and obligations to adopt risk mitigating measures”, she also said, adding that the disinformation code (or a similar arrangement) will be classed as a risk mitigating measure — encouraging platforms and other actors to get on board.

“We are already intensively cooperating with the big platforms,” she added, responding to a question about whether the Commission had left it too late to tackle the threat posed by COVID-19 vaccine disinformation. “We are not going to wait for the upgraded code of practice because we already have a very clear agreement with the platforms that they will continue doing what they have already started doing in summer or in spring.”

Platforms are already promoting fact-based, authoritative health information to counter COVID-19 disinformation, she added.

“As for the vaccination I already alerted Google and Facebook that we want to intensify this work. That we are planning and already working on the communications strategy to promote vaccination as the reliable — maybe the only reliable — method to get rid of COVID-19,” she also said, adding that this work is “in full swing”.

But Jourova emphasized that the incoming upgrade to the code of practice will bring more requirements — including around algorithmic accountability.

“We need to know better how platforms prioritize who sees what and why?” she said. “Also there must be clear rules how researchers can update relevant data. Also the measures to reduce monetization of disinformation. Fourth, I want to see better standards on cooperation with fact-checkers. Right now the picture is very mixed and we want to see a more systematic approach to that.”

The code must also include “clearer and better” ways to deal with manipulation related to the use of bots and fake accounts, she added.

The new code of practice on disinformation is expected to be finalized after the new year.

Current signatories include TikTok, Facebook, Google, Twitter and Mozilla.