Googling your symptoms isn’t always the best idea, but Google wants to change that, and it’s starting with your skin.

The search giant has developed Derm Assist, a new web-based app that can identify skin conditions from a photo. Derm Assist was unveiled at Google I/O 2021, Google’s annual developer conference, but you can’t use it yet. Google aims to launch it in the European Union by the end of this year.

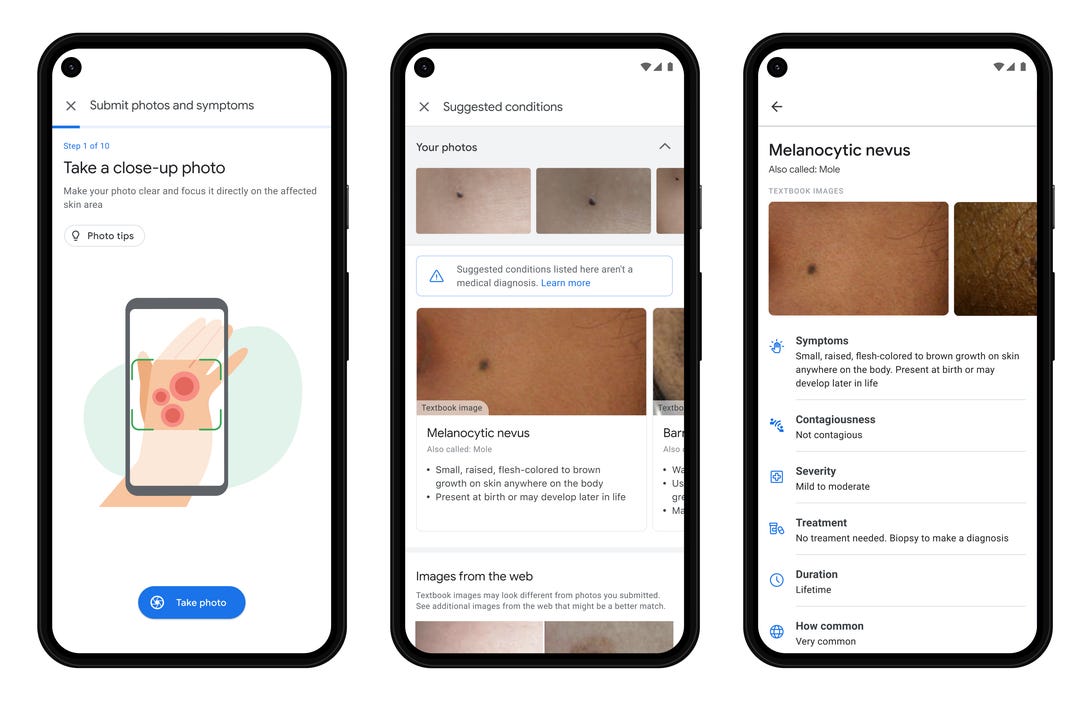

Here’s how it works. You spot a rash, lesion or strange-looking mole on your skin, snap a few photos of it and upload them to Derm Assist. Google’s artificial intelligence and machine-learning models analyze the photos and look for a match in a database of 288 skin conditions. The app then presents a handful of conditions you might have, with an accuracy rate of up to 97%, according to the company.

Derm Assist only needs three photos to match you with a few possible skin conditions, but to get more precise results, you can fill out an optional questionnaire that goes into more detail about your skin condition.

Google makes it clear that this is not a diagnostic tool, but rather a way to help narrow down possible conditions so you can determine if you should see a doctor or just grab some cream from the drugstore.

Why focus on skin? Each year Google gets 10 billion searches about skin conditions, so the demand is there. Skin conditions can also be tricky to identify on your own, which is where AI and machine learning come in. Google already knows that people use its search engine to look up medical conditions, so the company is leaning into that.

Google is far from the first to do this, as apps like Aysa, Miiskin and SkinVision have been around for a few years. To set itself apart, Google deliberately built Derm Assist as a web app, so anyone with a phone and a browser can use it. It’s also betting that its large library of skin conditions will give it an edge.

While building the app, the company made a point of training its AI on diverse data so it can identify skin conditions on people of color, not just on white skin. The hope is that people all over the world can use Derm Assist, regardless of skin color, and get information even where medical care is limited.

Derm Assist could be instrumental in helping people get the medical care they need for potentially serious skin conditions, but it does raise privacy concerns. After all, Google already knows so much about you. Do you really want to hand over your medical data too?

Before you use the tool, you have to sign a consent form allowing Google to collect your personal data, but you can remove that data from Derm Assist at any time. You can also opt to donate your photos and data to Google, so the company can use them to improve the tool and contribute to research studies.

Google also announced at I/O that, as part of a research study, it’s using AI to screen mammograms for potential issues. The average person can’t use this tool, but it could eventually help speed up the review of mammograms.