BOSTON, Oct. 6, 2022 /PRNewswire/ -- Human-centric manufacturing places high demands on production safety because machines work closely with human operators. Unlike traditional industrial robots, which are physically separated from human operators, cobots are designed to work side by side with humans, so their safety requirements are significantly higher.

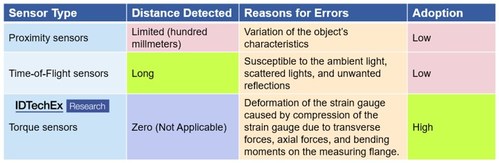

Torque sensors can be used for collision detection and force control. Human operators set the acceptable torque range in advance. When a collision occurs, the force/torque detected by the sensors exceeds the pre-set range, triggering the emergency stop function. Thanks to their robustness and low cost, torque sensors are the most widely used sensors in collaborative robots. However, torque sensors can only detect a torque change after a collision has happened, meaning they cannot predict or detect an upcoming collision beforehand.
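The reactive logic described above, where a measured torque leaving a pre-set range triggers an emergency stop, can be sketched as follows. This is a minimal illustration, not any vendor's controller: the `TorqueLimit` class, the per-joint limits and the `EMERGENCY_STOP`/`CONTINUE` return values are all hypothetical. Practical controllers typically compare the measured torque against the torque expected from the robot's own motion rather than against fixed bounds, but the key property is the same: nothing is flagged until contact has already occurred.

```python
from dataclasses import dataclass


@dataclass
class TorqueLimit:
    """Hypothetical pre-set torque range for one joint, in newton-metres."""
    low: float
    high: float


def collision_detected(measured_nm: list[float], limits: list[TorqueLimit]) -> bool:
    """Return True if any joint torque falls outside its pre-set range.

    This is purely reactive: a collision is only flagged once the measured
    torque has already left the range, i.e. after contact has happened.
    """
    if len(measured_nm) != len(limits):
        raise ValueError("one limit per joint is required")
    return any(not (lim.low <= tau <= lim.high) for tau, lim in zip(measured_nm, limits))


def control_step(measured_nm: list[float], limits: list[TorqueLimit]) -> str:
    # Hypothetical controller hook: request an emergency stop on a detected collision.
    return "EMERGENCY_STOP" if collision_detected(measured_nm, limits) else "CONTINUE"


# Hypothetical limits for a two-joint arm.
limits = [TorqueLimit(-20.0, 20.0), TorqueLimit(-15.0, 15.0)]
print(control_step([5.2, -3.1], limits))   # CONTINUE
print(control_step([5.2, -18.4], limits))  # EMERGENCY_STOP
```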

Proximity sensors and visual sensors could be an ideal solution to this, as they can detect and monitor the distance between human operators and machines. Proximity sensors are usually capacitive: the capacitance of the air gap between the sensor and a human changes when a human operator comes close to the cobot arm. However, proximity sensors are relatively expensive, and many of them are needed for the cobot to detect human operators from every direction, which ultimately leads to high cost and reduced affordability.
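The capacitive principle above amounts to watching for a change in capacitance relative to a "no one nearby" baseline. The sketch below assumes, hypothetically, readings in picofarads, a fixed change threshold per electrode, and a slowly updated baseline to absorb environmental drift; none of these values or names come from a specific product. It uses one channel per direction, which also illustrates why all-round coverage requires many sensors.

```python
class CapacitiveProximityChannel:
    """Sketch of one capacitive proximity electrode on a cobot arm.

    Hypothetical assumptions: readings in picofarads, and a human nearby is
    indicated by a capacitance change larger than a fixed threshold relative
    to a slowly tracked baseline.
    """

    def __init__(self, threshold_pf: float, alpha: float = 0.01):
        self.threshold_pf = threshold_pf
        self.alpha = alpha            # smoothing factor for baseline tracking
        self.baseline_pf = None       # set from the first reading

    def update(self, reading_pf: float) -> bool:
        """Return True if this reading indicates a human near the electrode."""
        if self.baseline_pf is None:
            self.baseline_pf = reading_pf
            return False
        # Use the absolute change: depending on the electrode layout the
        # capacitance may rise or fall when a person approaches.
        near = abs(reading_pf - self.baseline_pf) > self.threshold_pf
        if not near:
            # Follow slow drift (temperature, humidity) only when nobody is near,
            # so an approaching person is not absorbed into the baseline.
            self.baseline_pf += self.alpha * (reading_pf - self.baseline_pf)
        return near


# Four hypothetical electrodes, one per direction around a link of the arm.
channels = [CapacitiveProximityChannel(threshold_pf=0.5) for _ in range(4)]
readings = [
    [10.0, 10.0, 10.0, 10.0],   # first sample initialises the baselines
    [10.1, 9.9, 10.0, 10.05],   # small drift only
    [10.1, 10.9, 10.0, 10.0],   # channel 1 sees a large change
]
for sample in readings:
    # A list comprehension (not a generator inside any()) so every channel updates.
    flags = [ch.update(r) for ch, r in zip(channels, sample)]
    print(flags, "human nearby" if any(flags) else "clear")
```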

Aside from proximity sensors, vision systems also play an important role in detecting humans. One drawback of capacitive proximity sensors is that it is difficult to measure the distance between the sensor and an object precisely, because the measured value varies with the object's characteristics. In addition, the maximum detection range of proximity sensors is limited, typically to several hundred millimeters. To mitigate these issues, distance sensors can be used to improve the safety and functionality of human-robot interaction (HRI).

A time-of-flight (ToF) sensor is one type of distance sensor that can be used either on the robotic skin for collision avoidance or as part of the end-of-arm tooling (EoAT). To measure distance, ToF sensors use photodiodes (single sensor elements or arrays) and active illumination. The light reflected from an obstruction is compared with the transmitted light to determine the distance, and this information is then used to construct a 3D model of the object. Simply put, a ToF sensor illuminates an object with infrared light and measures how long the reflected light takes to return. The average distance-measurement error of ToF sensors is relatively small (less than 10%). However, despite this promise, IDTechEx has so far not seen any commercial ToF sensors being used for proximity sensing. IDTechEx believes there are several reasons for this:

- Susceptibility to ambient light – because ToF sensors rely on light, their measurements can be affected by ambient lighting conditions.

- Scattered light – if the surfaces around a ToF sensor are very bright, they can scatter light significantly and cause unwanted reflections, which ultimately reduce accuracy.

- Multiple reflections – when ToF sensors are mounted at corners or on concave surfaces, light can be reflected several times before it returns, producing unwanted reflections that distort measurements.
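The ToF principle described earlier reduces to simple arithmetic. Because light travels to the object and back, a pulsed (direct) sensor computes d = c·t/2 from the round-trip time t. A sensor that instead compares the phase of the reflected wave with the transmitted one (indirect ToF) computes d = c·Δφ/(4π·f), where f is the modulation frequency; the result is unambiguous only up to c/(2f). The sketch below shows both formulas and, with a hypothetical bounce path, why multiple reflections lead to over-reported distances. The function names and the 20 MHz modulation frequency are illustrative assumptions, not parameters of any particular sensor.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def distance_from_round_trip(t_seconds: float) -> float:
    """Direct (pulsed) ToF: halve the round-trip path length."""
    return SPEED_OF_LIGHT_M_S * t_seconds / 2.0


def distance_from_phase(phase_rad: float, mod_freq_hz: float) -> float:
    """Indirect (continuous-wave) ToF: d = c * phase / (4 * pi * f_mod)."""
    return SPEED_OF_LIGHT_M_S * phase_rad / (4.0 * math.pi * mod_freq_hz)


def unambiguous_range(mod_freq_hz: float) -> float:
    """Beyond c / (2 * f_mod) the phase wraps around and distances alias."""
    return SPEED_OF_LIGHT_M_S / (2.0 * mod_freq_hz)


# An object 0.5 m away gives a round trip of about 3.34 ns.
t_direct = 2 * 0.5 / SPEED_OF_LIGHT_M_S
print(round(distance_from_round_trip(t_direct), 3))     # 0.5

# Hypothetical multipath: the light bounces off a nearby surface first, so the
# total path is 1.2 m instead of 1.0 m and the sensor over-reports the distance.
t_multipath = 1.2 / SPEED_OF_LIGHT_M_S
print(round(distance_from_round_trip(t_multipath), 3))  # 0.6

# Hypothetical 20 MHz modulation with a quarter-cycle phase shift.
print(round(distance_from_phase(math.pi / 2, 20e6), 3))  # 1.874
```

The multipath case shows why corners and concave mounting positions are problematic: the sensor cannot tell a longer bounce path from a farther object, so the error is systematic rather than random noise.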

IDTechEx has noticed that most commercial cobots still use torque sensors as their only sensing system for collision detection. However, with increasingly complex tasks and stricter safety requirements, some companies have begun to integrate different sensors and apply sensor fusion. Sensor fusion is the process of combining sensor data, or data derived from disparate sources, such that the resulting information has less uncertainty than would be possible if these sources were used individually. In other words, the fused model is more accurate because it balances the strengths of the different sensors. Sensor fusion enables tight coupling between multiple sensors and their associated algorithms.
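The claim that fused information has less uncertainty than any single source can be made concrete with inverse-variance weighting, one of the simplest fusion techniques (a Kalman filter extends the same idea over time). This is an illustrative sketch, not the method used by any particular cobot maker. It assumes, hypothetically, two independent and unbiased distance estimates, one from a ToF sensor and a noisier one from a capacitive sensor, with made-up variances. The fused variance, 1/Σ(1/σᵢ²), is always smaller than the smallest individual variance.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    value: float     # e.g. distance to a human operator, in metres
    variance: float  # uncertainty of that estimate, in square metres


def fuse(estimates: list[Estimate]) -> Estimate:
    """Inverse-variance weighted fusion of independent, unbiased estimates.

    More certain sources (smaller variance) receive larger weights, and the
    fused variance is smaller than that of any individual source.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / e.variance for e in estimates]
    total = sum(weights)
    value = sum(w * e.value for w, e in zip(weights, estimates)) / total
    return Estimate(value=value, variance=1.0 / total)


tof = Estimate(value=0.42, variance=0.02 ** 2)         # hypothetical ToF reading
capacitive = Estimate(value=0.38, variance=0.05 ** 2)  # hypothetical capacitive estimate
fused = fuse([tof, capacitive])
print(f"{fused.value:.3f} m, std {fused.variance ** 0.5:.4f} m")  # 0.414 m, std 0.0186 m
```

The fused estimate leans towards the more precise ToF reading while still using the capacitive one, and its standard deviation (about 0.0186 m) is below the ToF sensor's 0.02 m. The independence assumption matters: correlated errors between sensors would make this formula overstate the gain.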

In summary, although cobots work closely with human operators, the sensors and algorithms mentioned above ensure relatively safe HRI. Many end users have not adopted some of the more expensive sensors, such as proximity sensors, because of their high cost and relatively limited practical use. However, based on its recent research for the report "Collaborative Robots (Cobots) 2023-2043: Technologies, Players & Markets", IDTechEx believes that as cobots take on more tasks and safety requirements increase, more sensors will likely be integrated into cobot systems.

For more information on this report, please visit www.IDTechEx.com/Cobots, or for the full portfolio of robotics research available from IDTechEx, please visit www.IDTechEx.com/Research/Robotics.

About IDTechEx

IDTechEx guides your strategic business decisions through its Research, Subscription and Consultancy products, helping you profit from emerging technologies. For more information, contact [email protected] or visit www.IDTechEx.com.

Images download:

https://www.dropbox.com/sh/1cicsqnkr0qu0xb/AACxQmj7CtGEwwa3jZKXuOUia?dl=0

Media Contact:

Natalie Fifield

Digital Marketing Manager

[email protected]

+44(0)1223 812300

Social Media Links:

Twitter: https://www.twitter.com/IDTechEx

LinkedIn: https://www.linkedin.com/company/idtechex/

Facebook: https://www.facebook.com/IDTechExResearch

Photo: https://mma.prnewswire.com/media/1914107/Comparison_of_Safety_Sensors_for_Cobots.jpg

Logo: https://mma.prnewswire.com/media/478371/IDTechEx_Logo.jpg

SOURCE IDTechEx