Today, for the first time, we are including the prevalence of hate speech on Facebook as part of our quarterly Community Standards Enforcement Report.

How We Measure the Prevalence of Hate Speech

Prevalence estimates the percentage of times people see violating content on our platform. We calculate hate speech prevalence by selecting a sample of content seen on Facebook and then labeling how much of it violates our hate speech policies. Because hate speech depends on language and cultural context, we send these representative samples to reviewers across different languages and regions. Based on this methodology, we estimated that the prevalence of hate speech from July 2020 to September 2020 was 0.10% to 0.11%. In other words, out of every 10,000 views of content on Facebook, 10 to 11 included hate speech.
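The arithmetic behind a prevalence estimate is simple: the share of sampled views labeled as violating, which can also be expressed per 10,000 views. The Python sketch below is an illustration under stated assumptions, not our production pipeline. `SampledView`, `estimate_prevalence` and `per_10k_views` are hypothetical names, the sample is made up, and the normal-approximation interval is one common way to attach a range to a sampled proportion, assumed here for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class SampledView:
    """One randomly sampled content view plus a reviewer's label (hypothetical schema)."""
    content_id: str
    violates_hate_speech_policy: bool


def estimate_prevalence(sample, z=1.96):
    """Estimate the share of views that contained violating content.

    Returns (point_estimate, lower, upper). The interval uses a normal
    approximation to the binomial distribution, an assumption for illustration.
    """
    n = len(sample)
    if n == 0:
        raise ValueError("sample must contain at least one view")
    violating = sum(1 for view in sample if view.violates_hate_speech_policy)
    p = violating / n
    margin = z * math.sqrt(p * (1 - p) / n)
    return p, max(0.0, p - margin), min(1.0, p + margin)


def per_10k_views(rate):
    """Express a prevalence rate as a number of views per 10,000 views."""
    return rate * 10_000


# Made-up sample: 21 violating views out of 20,000 sampled views.
sample = [SampledView(f"v{i}", i < 21) for i in range(20_000)]
p, low, high = estimate_prevalence(sample)
print(f"prevalence about {p:.4%} ({per_10k_views(p):.1f} per 10,000 views)")
```

The same conversion explains the figures above: a rate of 0.10% corresponds to 10 views per 10,000, and 0.11% to 11.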

We specifically measure how much harmful content may be seen on Facebook and Instagram because views are not evenly distributed across content. One piece of content could go viral and be seen by many people in a very short time, whereas other content could stay online for a long time and be seen by only a handful of people.
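To see why counting views differs from counting pieces of content, compare the two measures on a made-up set of posts. This is a minimal sketch, assuming a hypothetical `ContentItem` record that carries a view count and a reviewer label: one viral violating post and one rarely seen violating post count equally when measuring by content, but very differently when measuring by views.

```python
from dataclasses import dataclass
from fractions import Fraction


@dataclass
class ContentItem:
    """A piece of content with its total views and a reviewer label (hypothetical schema)."""
    content_id: str
    views: int
    violating: bool


def share_of_content(items):
    """Fraction of distinct items that violate, ignoring how often each was seen."""
    return Fraction(sum(1 for item in items if item.violating), len(items))


def share_of_views(items):
    """Fraction of all views that landed on violating items (a prevalence-style measure)."""
    total_views = sum(item.views for item in items)
    violating_views = sum(item.views for item in items if item.violating)
    return Fraction(violating_views, total_views)


# Made-up data: one viral and one obscure violating post among 998 benign posts.
items = (
    [ContentItem("viral-post", 50_000, True), ContentItem("obscure-post", 5, True)]
    + [ContentItem(f"benign-{i}", 1_000, False) for i in range(998)]
)
print(float(share_of_content(items)))  # 2 of 1,000 items
print(float(share_of_views(items)))    # views on those 2 items out of all views
```

Exact fractions are used so the two measures can be compared without rounding noise; in this toy data the view-based share is more than 20 times the content-based share.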

We evaluate the effectiveness of our enforcement by how low we can keep the prevalence of hate speech on our platform while minimizing mistakes in the content we remove.

Defining and Addressing the Nuances of Hate Speech

Defining hate speech isn’t simple, as there are many differing opinions on what constitutes hate speech. Nuance, history, language, religion and changing cultural norms are all important factors to consider as we define our policies.

Based on input from a wide array of global experts and stakeholders, we define hate speech as anything that directly attacks people based on protected characteristics, including race, ethnicity, national origin, religious affiliation, sexual orientation, sex, gender, gender identity or serious disability or disease.

Over the last few years, we’ve expanded our policies to provide greater protections to people from different types of abuse. We’ve taken steps to combat white nationalism and white separatism; introduced new rules on content calling for violence against migrants; banned Holocaust denial; and updated our policies to account for certain kinds of implicit hate speech, such as content depicting blackface, or stereotypes about Jewish people controlling the world.

Our goal is to remove hate speech any time we become aware of it, but we know we still have progress to make. Language continues to evolve, and a word that was not a slur yesterday may become one tomorrow. This means content enforcement is a delicate balance between making sure we don’t miss hate speech and not removing other forms of permissible speech. That’s why we prioritize the most critical content for people to review based on factors such as virality, severity of harm and likelihood of violation.
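One way to picture this kind of prioritization is a review queue ordered by a score. The sketch below is hypothetical: the three factors come from the paragraph above, but the scoring function (a simple product of normalized virality, severity and likelihood scores), the tuple layout and the example values are assumptions for illustration, not a description of how our systems actually weigh these factors.

```python
import heapq


def priority_score(virality, severity, likelihood):
    """Hypothetical score: expected harm as reach x severity x probability of violation.

    Each input is assumed to be pre-normalized to the range [0, 1].
    """
    return virality * severity * likelihood


def build_review_queue(items):
    """items: iterable of (content_id, virality, severity, likelihood) tuples.

    Returns a heap from which the highest-scoring item is popped first.
    """
    queue = []
    for content_id, virality, severity, likelihood in items:
        score = priority_score(virality, severity, likelihood)
        # heapq implements a min-heap, so push the negated score.
        heapq.heappush(queue, (-score, content_id))
    return queue


def next_for_review(queue):
    """Pop the highest-priority item and return (content_id, score)."""
    neg_score, content_id = heapq.heappop(queue)
    return content_id, -neg_score


# Made-up example items.
queue = build_review_queue([
    ("post-a", 0.9, 0.8, 0.7),   # spreading fast, severe, likely violating
    ("post-b", 0.1, 0.9, 0.95),  # severe and likely violating, but barely seen
    ("post-c", 0.6, 0.3, 0.5),   # moderately viral, lower severity, uncertain
])
while queue:
    print(next_for_review(queue))
```

A multiplicative score means an item that is very unlikely to violate, or that almost nobody will see, drops toward the back of the queue even if it scores high on the other factors; a real system could reasonably weigh these factors quite differently.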

The Role of User Reports

When it comes to enforcement, we use a combination of user reports and technology to find hate speech on Facebook and Instagram. Every week, people across the world report millions of pieces of content to us that they believe violate our policies. These reports are reviewed in over 50 languages and give us insight into what’s happening on our platform so we can continue to refine our policies. We also strive to improve our reporting tools to make it easier for people to report content they think may violate our policies, but user reports have limitations. In areas with lower digital literacy, for example, people may be less aware of the option to file a user report. People also often report content they dislike or disagree with but that does not violate our policies, such as content from rival sports teams or spoilers for TV shows they haven’t watched yet. In addition, some content may be seen by many people before it is reported, so we can’t rely on user reports alone.

We use AI to help prioritize content for review, so our reviewers can focus on the content that poses the most harm and spend more time training our automated systems and measuring their quality.

The Role of AI Tools and Systems

We’ve developed AI tools and systems to proactively find and remove hate speech at scale. Because hate speech is so contextual, AI detection requires an ability to review posts holistically, with context, and across multiple languages.

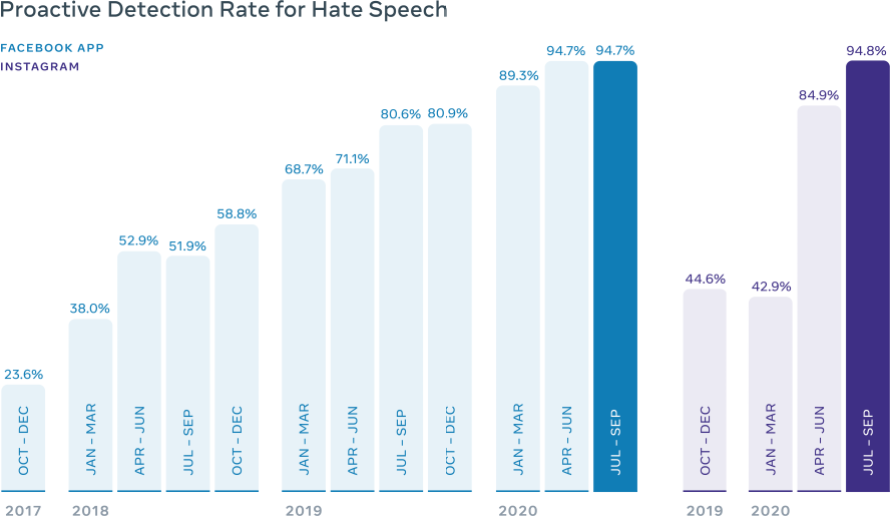

Advancements in AI technologies have allowed us to remove more hate speech from Facebook over time and to find more of it before users report it to us. When we first began reporting our metrics for hate speech, in Q4 of 2017, our proactive detection rate was 23.6%. This means that of the hate speech we removed, 23.6% was found before a user reported it to us; the remaining majority was removed after a user reported it. Today we proactively detect about 95% of the hate speech we remove. Whether content is proactively detected or reported by users, we often use AI to take action on straightforward cases and prioritize the more nuanced cases, where context needs to be considered, for our reviewers.
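The proactive detection rate is a simple ratio: removals found before any user report, divided by all removals in the period. Here is a minimal sketch, assuming a hypothetical `Removal` record that flags whether each removal was found proactively, with made-up counts chosen to reproduce a 23.6% rate.

```python
from dataclasses import dataclass


@dataclass
class Removal:
    """One removed piece of hate speech (hypothetical schema)."""
    content_id: str
    found_proactively: bool  # True if automated systems flagged it before any user report


def proactive_detection_rate(removals):
    """Share of removed hate speech that was detected before a user reported it."""
    if not removals:
        raise ValueError("no removals in this reporting period")
    proactive = sum(1 for r in removals if r.found_proactively)
    return proactive / len(removals)


# Made-up period: 236 of 1,000 removals were found proactively.
removals = [Removal(f"c{i}", i < 236) for i in range(1_000)]
print(f"{proactive_detection_rate(removals):.1%}")
```

Note that the denominator is content that was removed, not all hate speech on the platform, which is why this rate is reported alongside prevalence rather than in place of it.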

We’ve invested billions of dollars in people and technology to enforce these rules, and we have more than 35,000 people working on safety and security at Facebook. As speech continues to evolve over time, we continue to revise our policies to reflect changing societal trends. But we believe decisions about free expression and safety shouldn’t be made by Facebook alone, so we continue to consult third-party experts in shaping our policies and enforcement tactics. And for difficult and significant content decisions, we can now refer cases to the Oversight Board for its independent review and binding decisions.

With the use of prevalence, user reports and AI, we’re working to keep Facebook and Instagram inclusive and safe places for everyone who uses them.
