Using our apps to harm children is abhorrent and unacceptable. Our industry-leading efforts to combat child exploitation focus on preventing abuse, detecting and reporting content that violates our policies, and working with experts and authorities to keep children safe.

Today, we’re announcing new tools we’re testing to keep people from sharing content that victimizes children and recent improvements we’ve made to our detection and reporting tools.

Focusing on Prevention

To understand how and why people share child exploitative content on Facebook and Instagram, we conducted an in-depth analysis of the illegal child exploitative content we reported to the National Center for Missing and Exploited Children (NCMEC) in October and November of 2020. We found that more than 90% of this content was the same as or visually similar to previously reported content. And copies of just six videos were responsible for more than half of the child exploitative content we reported in that time period. This data indicates that the number of pieces of content does not equal the number of victims, and that the same content, potentially slightly altered, is being shared repeatedly. Still, one victim of this horrible crime is one too many.

The fact that only a few pieces of content were responsible for many reports suggests that a greater understanding of intent could help us prevent this revictimization. We worked with leading experts on child exploitation, including NCMEC, to develop a research-backed taxonomy to categorize a person’s apparent intent in sharing this content. Based on this taxonomy, we evaluated 150 accounts that we reported to NCMEC for uploading child exploitative content in July and August of 2020 and January 2021, and we estimate that more than 75% of these people did not exhibit malicious intent (i.e., did not intend to harm a child). Instead, they appeared to share for other reasons, such as out of outrage or in poor humor (e.g., a child’s genitals being bitten by an animal). While this study represents our best understanding, these findings should not be considered a precise measure of the child safety ecosystem. Our work to understand intent is ongoing.

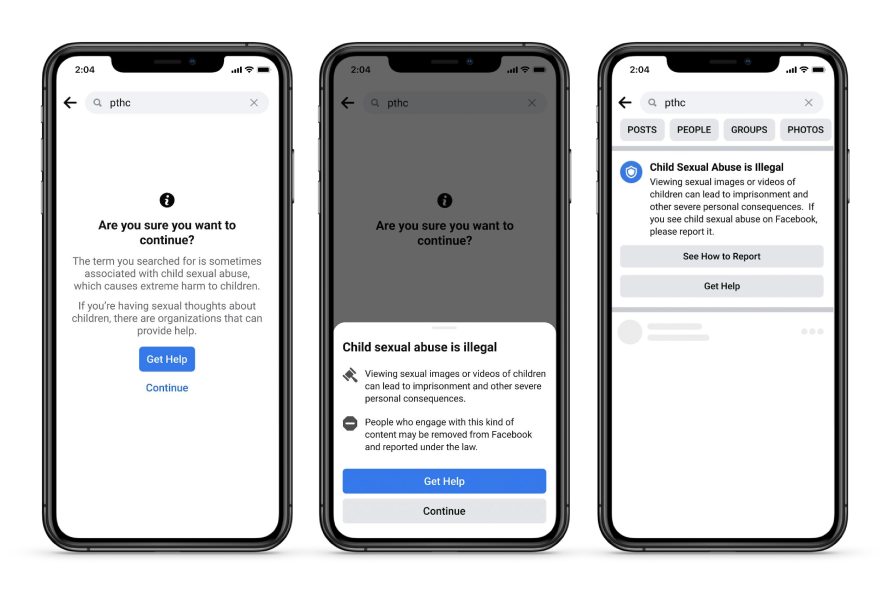

Based on our findings, we are developing targeted solutions, including new tools and policies to reduce the sharing of this type of content. We’ve started by testing two new tools: one aimed at potentially malicious searches for this content and another aimed at non-malicious sharing of it. The first is a pop-up shown to people who search our apps for terms associated with child exploitation. The pop-up offers ways to get help from offender diversion organizations and shares information about the consequences of viewing illegal content.

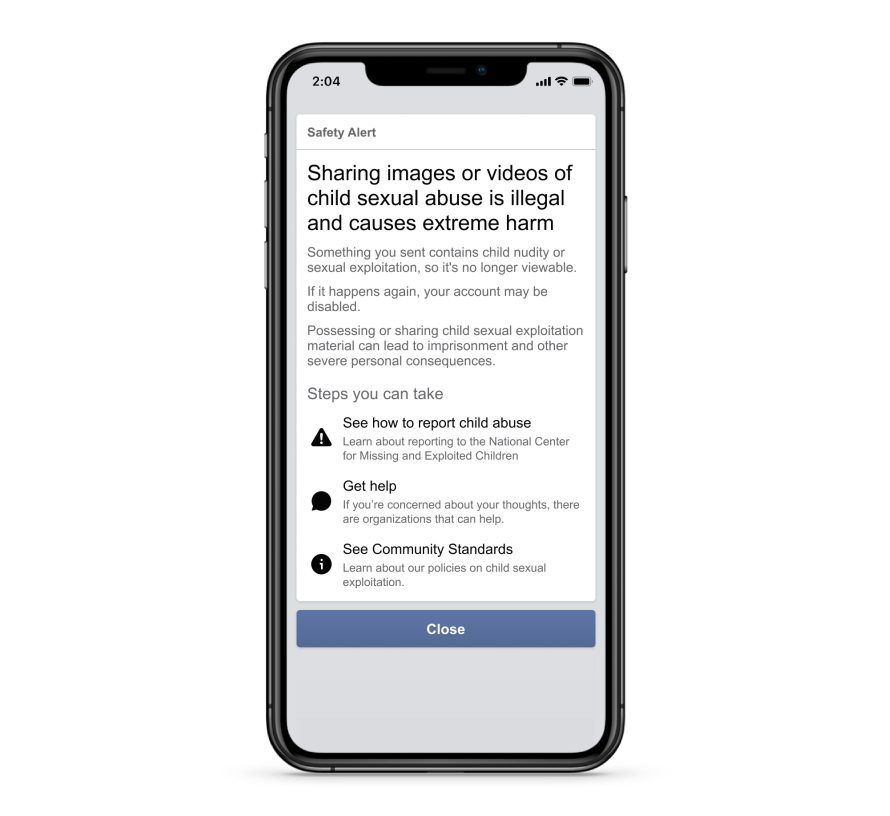

The second is a safety alert that informs people who have shared viral, meme-style child exploitative content about the harm it can cause, and warns them that sharing this material violates our policies and carries legal consequences. We share this safety alert in addition to removing the content, banking it and reporting it to NCMEC. Accounts that promote this content will be removed. We are using insights from this safety alert to help us identify behavioral signals of those who might be at risk of sharing this material, so we can also educate them on why it is harmful and encourage them not to share it on any surface, public or private.

Improving Our Detection Capabilities

For years, we’ve used technology to find child exploitative content and detect possible inappropriate interactions with children or child grooming. We’ve also expanded our work to detect and remove networks that violate our child exploitation policies, similar to our efforts against coordinated inauthentic behavior and dangerous organizations.

In addition, we’ve updated our child safety policies to clarify that we will remove Facebook profiles, Pages, groups and Instagram accounts that are dedicated to sharing otherwise innocent images of children with captions, hashtags or comments containing inappropriate signs of affection or commentary about the children depicted in the image. We’ve always removed content that explicitly sexualizes children, but content that isn’t explicit and doesn’t depict child nudity is harder to define. Under this new policy, while the images alone may not break our rules, the accompanying text can help us better determine whether the content is sexualizing children and if the associated profile, Page, group or account should be removed.

Updating Our Reporting Tools

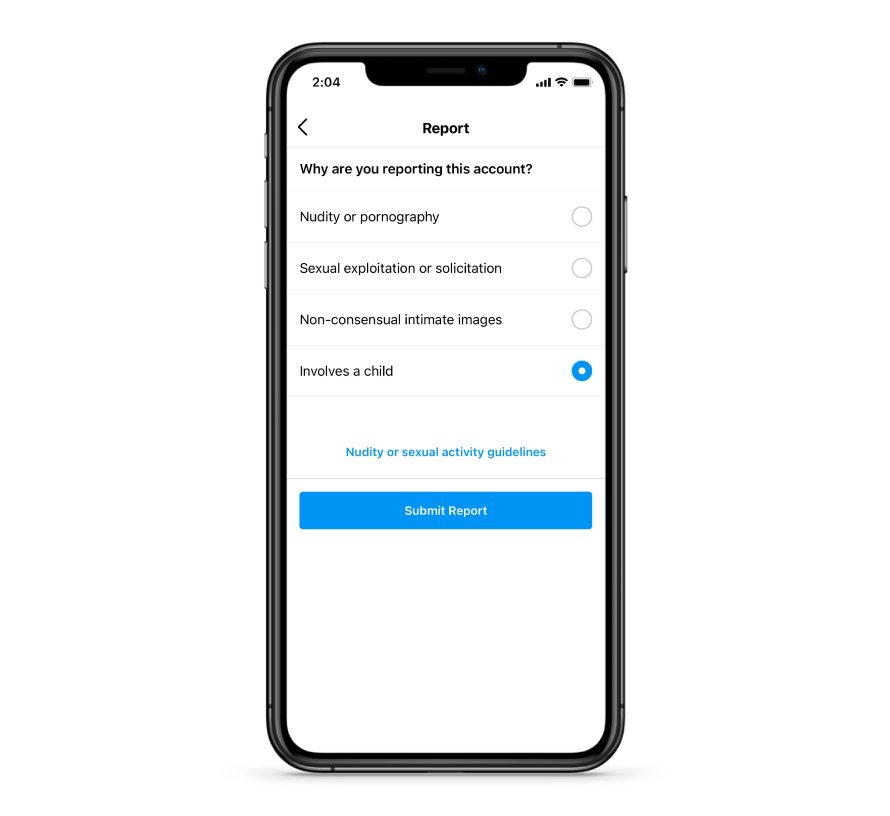

After consultations with child safety experts and organizations, we’ve made it easier to report content for violating our child exploitation policies. To do this, we added the option to choose “involves a child” under the “Nudity & Sexual Activity” category of reporting in more places on Facebook and Instagram. These reports will be prioritized for review. We also started using Google’s Content Safety API to help us better prioritize content that may contain child exploitation for our content reviewers to assess.

To learn more about our ongoing efforts to protect children in both public and private spaces on our apps, visit facebook.com/safety/onlinechildprotection.