Google’s researchers have demonstrated that, subject to certain conditions, error correction works on the company’s Sycamore quantum processor and that its benefits can even scale exponentially with the number of qubits. The result is yet another step towards building a fault-tolerant quantum computer.

The breakthrough is likely to catch the attention of scientists working on quantum error correction, a field that is concerned not with qubit counts but rather with qubit quality.

While increasing the number of qubits supported by quantum computers is often presented as the key to unlocking the unprecedented compute power of quantum technologies, it is equally important to ensure that those qubits behave in a way that allows for reliable, error-free results.

This is the idea that underpins the concept of a fault-tolerant quantum computer, but quantum error correction is still in its very early stages. For now, scientists still struggle to control and manipulate even the few qubits they have, because qubits are extremely fragile. As a result, quantum computations remain riddled with errors.

According to Google, most applications would call for error rates as low as 10⁻¹⁵; in comparison, state-of-the-art quantum platforms currently have average error rates that are nearer 10⁻³.

One solution is to improve the physical stability of qubits, but scientists are increasingly prioritizing an alternative approach, in which errors are detected and corrected directly within the quantum processor.

Typically, this is done by distributing quantum data across many different qubits and using additional qubits to track that information, identifying and correcting errors as they go. The resulting error-corrected group of qubits behaves as a single unit known as a “logical qubit”.

This approach, known as a stabilizer code, interleaves data qubits with measurement qubits. The measurement qubits turn undesired perturbations of the data qubits’ states into discrete, detectable errors, which classical software can then identify and compensate for.
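To make the idea concrete, here is a minimal classical sketch of one of the simplest stabilizer codes, the bit-flip repetition code. It assumes perfect parity measurements and models only bit flips (no phase errors or measurement errors), and the function names are hypothetical, not taken from Google’s software. Each measurement qubit is modelled as reporting whether its two neighbouring data qubits agree, and a simple decoder picks the smallest set of flips consistent with those reports.

```python
from typing import List


def measure_syndrome(data: List[int]) -> List[int]:
    """Parity of each neighbouring pair of data qubits.

    Each measurement qubit sits between two data qubits, so syndrome bit i
    is 1 exactly when data qubits i and i+1 disagree.
    """
    return [data[i] ^ data[i + 1] for i in range(len(data) - 1)]


def decode(syndrome: List[int]) -> List[int]:
    """Return the lowest-weight bit-flip pattern consistent with the syndrome.

    Assuming the first data qubit is unflipped fixes every other flip; the
    only other consistent pattern is its complement, so keep whichever of
    the two flips fewer qubits (ties go to the first).
    """
    candidate = [0]
    for s in syndrome:
        candidate.append(candidate[-1] ^ s)
    complement = [1 - bit for bit in candidate]
    return candidate if sum(candidate) <= sum(complement) else complement


def correct(data: List[int]) -> List[int]:
    """Measure the syndrome, decode it, and undo the inferred flips."""
    flips = decode(measure_syndrome(data))
    return [bit ^ flip for bit, flip in zip(data, flips)]


# Logical 0 stored on three data qubits, with a stray flip on the middle one.
noisy = [0, 1, 0]
print(measure_syndrome(noisy))  # [1, 1]
print(correct(noisy))           # [0, 0, 0]
```

Note that the decoder never looks at the data qubits directly, only at the parity reports, which mirrors how measurement qubits let errors be found without reading out the stored value itself.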

While the principles of stabilizer codes have been theoretically applied to different platforms, Google said, the method has not been shown to scale to large systems, nor to withstand several rounds of error correction.

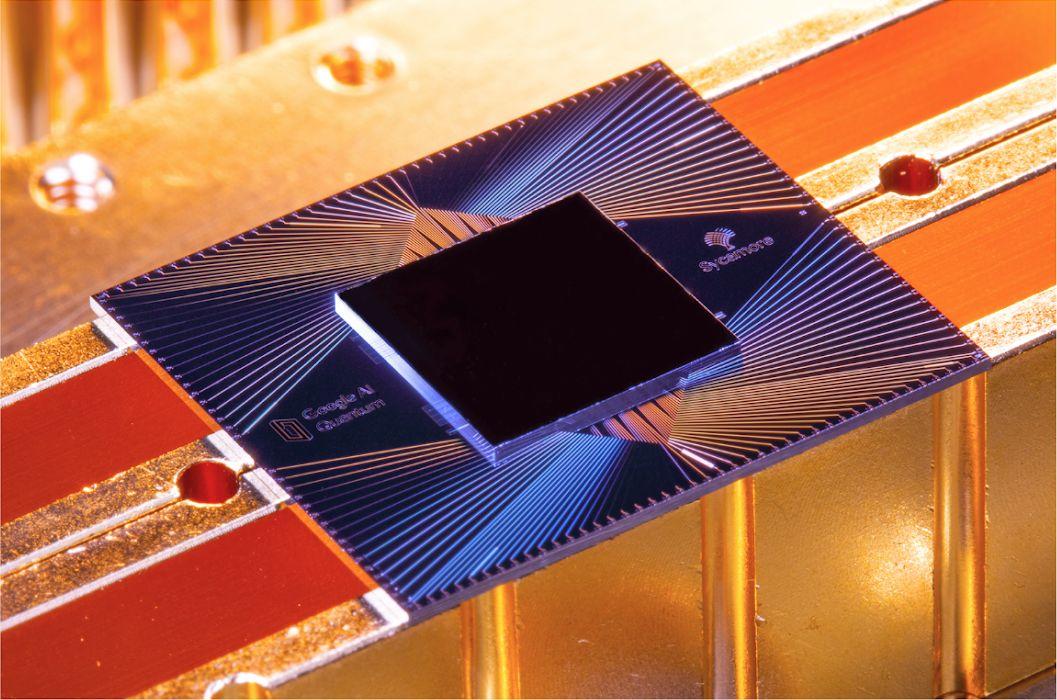

The advertising giant’s researchers set out to test stabilizer codes on the company’s Sycamore quantum processor, starting with a logical qubit made up of five qubits linked in a one-dimensional chain. Along the chain, the qubits alternated between data qubits and measurement qubits tasked with detecting errors.
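The alternating layout can be sketched as follows, assuming (as is usual for such chains, though the article does not spell it out) that the chain starts and ends on a data qubit. Under that assumption, a five-qubit chain holds three data qubits and two measurement qubits, and a 21-qubit chain holds 11 and 10. `chain_layout` is a hypothetical helper for illustration only.

```python
def chain_layout(n_qubits: int) -> str:
    """Return the role of each qubit along the chain: D = data, M = measurement.

    Assumes (hypothetically) that the chain starts and ends on a data qubit,
    so an odd-length chain has one more data qubit than measurement qubits.
    """
    if n_qubits < 3 or n_qubits % 2 == 0:
        raise ValueError("expected an odd chain length of at least 3")
    return "".join("D" if i % 2 == 0 else "M" for i in range(n_qubits))


for n in (5, 21):
    layout = chain_layout(n)
    print(n, layout, "data:", layout.count("D"), "measurement:", layout.count("M"))
```

Every measurement qubit in this layout has a data qubit on each side, which is what lets it report the parity of its two neighbours.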

The researchers found that increasing the size of the cluster reduced the logical error exponentially. The team tested logical qubits of various sizes, up to a maximum of 21 qubits, which reduced the logical error more than 100-fold compared with a five-qubit cluster. In other words, the larger the logical qubit, the better errors can be corrected.
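Why adding qubits can suppress errors exponentially is easiest to see in a toy model. The sketch below assumes each data qubit flips independently with a hypothetical probability `p` and that parity checks never fail, then computes how often majority voting over `d` data qubits (the code “distance”) picks the wrong value. Because failure requires more than half of the data qubits to flip, the logical error shrinks by a roughly constant factor each time the distance grows by two. The model ignores measurement errors and correlated noise, so it overstates the suppression seen on real hardware; only the trend carries over. It also assumes a chain of `2d - 1` qubits holds `d` data qubits.

```python
from math import comb


def logical_error(p: float, d: int) -> float:
    """Probability that majority voting over d data qubits fails.

    Toy model (an assumption, not Google's noise model): each data qubit
    flips independently with probability p and parity checks are perfect.
    Decoding fails when more than half of the d qubits flip.
    """
    return sum(comb(d, k) * p**k * (1 - p) ** (d - k) for k in range(d // 2 + 1, d + 1))


p = 0.01  # hypothetical physical error rate, chosen for illustration only
previous = None
for d in range(3, 12, 2):  # distances 3..11, i.e. chains of 5..21 qubits
    err = logical_error(p, d)
    ratio = "" if previous is None else f"  (x{previous / err:.0f} lower)"
    print(f"d={d:2d}  qubits={2 * d - 1:2d}  P_L={err:.2e}{ratio}")
    previous = err

# Going from 5 to 21 qubits raises d from 3 to 11 in four steps of two, so an
# overall >100-fold reduction implies an average factor above 100**(1/4) per step.
print(f"{100 ** 0.25:.2f}")  # 3.16
```

The last line ties the toy model back to the reported figure: under the distance assumption above, a more-than-100-fold improvement across four steps means each step of two extra data qubits cut the logical error by a factor of more than about 3.16 on average.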

This is important because practical quantum computers are expected to require at least 1,000 physical qubits for each logical qubit. Proving that error correction methods can scale is therefore fundamental to the development of a useful quantum computer.

Google also found that the error suppression rate remained stable even after 50 rounds of error correction – a “key finding” for the feasibility of quantum error correction, said the company.
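One way to see why a stable per-round error rate matters: if each round of correction independently leaves a hypothetical logical error probability `eps`, the stored value is wrong after many rounds whenever an odd number of rounds flipped it, which has a simple closed form. The sketch below, under that independence assumption, computes it both in closed form and round by round as a cross-check.

```python
def accumulated_error(eps: float, rounds: int) -> float:
    """Chance the logical bit is wrong after `rounds` rounds of correction.

    Assumes each round independently flips the logical bit with probability
    eps; the bit ends up wrong when an odd number of rounds flipped it.
    """
    return (1 - (1 - 2 * eps) ** rounds) / 2


def accumulated_error_stepwise(eps: float, rounds: int) -> float:
    """Same quantity, tracked round by round."""
    wrong = 0.0
    for _ in range(rounds):
        # Stays wrong if not flipped this round; becomes wrong if right and flipped.
        wrong = wrong * (1 - eps) + (1 - wrong) * eps
    return wrong


eps = 1e-3  # hypothetical per-round logical error, for illustration only
for r in (1, 10, 50):
    print(r, f"{accumulated_error(eps, r):.4f}", f"{accumulated_error_stepwise(eps, r):.4f}")
```

If the per-round rate drifted upwards over time, the accumulated error would grow faster than this formula predicts, which is why a rate that holds steady across many rounds is encouraging.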

Of course, the experiment still has major limitations. Current quantum computers support fewer than 100 qubits (Sycamore, for example, has 54), meaning that it is impossible to test the method with the 1,000 qubits that would be necessary for practical applications.

And even if 21 qubits were sufficient to create a useful logical qubit, Google’s processor would only be capable of supporting two of those logical qubits, which is still far from enough to use in real-life applications. Google’s results, therefore, remain for now a proof-of-concept.

In addition, the scientists highlighted that qubits’ inherently high error rates are likely to become problematic. In the team’s experiments, 11% of the checks ended up detecting an error, meaning that error correction technologies will have to be incredibly efficient to catch and correct every perturbation in devices with several thousand times more qubits.
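The scale of the decoding problem follows from simple arithmetic. Assuming, hypothetically, that the 11% detection rate stays fixed as devices grow, the expected number of detection events a decoder must process is that fraction times the number of checks times the number of rounds. The 10-check example assumes the 21-qubit chain has 10 measurement qubits; the 100,000-check device is purely illustrative.

```python
def expected_detections(fraction: float, checks: int, rounds: int = 1) -> float:
    """Expected detection events, assuming each check fires with a fixed probability."""
    return fraction * checks * rounds


DETECTION_FRACTION = 0.11  # share of checks that detected an error in the experiment

print(f"{expected_detections(DETECTION_FRACTION, 10, 50):.0f}")     # 21-qubit chain, 50 rounds: 55
print(f"{expected_detections(DETECTION_FRACTION, 100_000):,.0f}")  # hypothetical device, per round: 11,000
```

Every one of those events has to be interpreted before the next round's results arrive, so the decoder's workload grows in step with the device.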

“These experimental demonstrations provide a foundation for building a scalable fault-tolerant quantum computer with superconducting qubits,” said the researchers. “Nevertheless, many challenges remain on the path towards scalable quantum error correction.”

Still, Google’s findings open the door to further research and experiments in an increasingly busy field.

Earlier this year, for example, Amazon’s cloud subsidiary AWS released its first research paper detailing a new architecture for a quantum computer with the objective of setting a new standard for error correction.

AWS’s method relies on a similar approach to Google’s, but is coupled with a processor design that could reduce qubits’ potential to flip states, in what was pitched as a blueprint for a more precise quantum computer.